Choosing the right pace for your lessons (thanks to queueing theory)

Hi everyone! I often see questions on the forums about how many lessons one should do every day, or from people swamped by reviews who wonder how to get on top of them.

I present to you the ultimate guide to choosing your lesson rate, based on the awesome power of queueing theory.

Final edit: the post below is correct, but only under a few assumptions that are not true in practice (the same accuracy at every level, and the number of levels an item drops after a wrong answer). I have since built a web tool that uses your review history to get your accuracy at each level and computes all of the math below for you: https://castux.github.io/wanikani-stats/?key=your_key_here. This post is left here for those curious about how it works.

The results

The first thing you’ll need to know is your accuracy, that is, your average percentage of correct reviews. You can find it, for instance, on the WKStats website.

Then, consider the following table. All the values (except time to burn) are computed for a reference lesson rate of 1 lesson per day, and they scale linearly with the lesson rate, which means you can either:

- Multiply the values by your actual average lesson rate, which gives you your average number of reviews per day and your average queue size (with the apprentice items broken out separately).

- OR: set yourself a target (in reviews per day or in queue size), and divide it by the table value to deduce the corresponding lesson rate.

Examples:

- My accuracy is about 94%, so I only look at the 94% line. I aim for about 20 lessons per day. That means I’ll have, on average, about 9.3 * 20 = 186 reviews per day (including 4.7 * 20 = 94 for apprentice items), and my average number of non-burned items will be 191.2 * 20 = 3824 (including 4.2 * 20 = 84 apprentice items).

- My accuracy is 85%. I follow the rule of thumb I saw on the forums: “no more than 100 apprentice items at once”. At the reference rate of 1 lesson per day, the apprentice queue size is 6.1, so I deduce that my lesson rate should be no more than 100 / 6.1 = 16.4 lessons per day. Reading the rest of the line, I realize that this will actually mean about 12.4 * 16.4 ≈ 203 reviews per day!

- Maybe I’ll base my pacing on reviews instead. Still at 85% accuracy, the table gives 12.4 reviews per day (for the reference 1 lesson per day). If I want to limit myself to 80 reviews per day, I deduce that my lesson rate should be no more than 80 / 12.4 ≈ 6.4 lessons per day.
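The arithmetic in these examples is a table lookup followed by a multiplication or a division. Here is a minimal Python sketch of it. `TABLE` holds only the 85% and 94% rows copied from the table, and the function names are hypothetical.

```python
# Two rows copied from the table (values for the reference rate of 1 lesson/day).
# Columns: total reviews/day, apprentice reviews/day, total queue, apprentice queue.
TABLE = {
    0.85: (12.4, 6.5, 223.5, 6.1),
    0.94: (9.3, 4.7, 191.2, 4.2),
}
COLUMNS = ("total_reviews", "apprentice_reviews", "total_queue", "apprentice_queue")


def daily_load(accuracy, lessons_per_day):
    """Scale every per-lesson value linearly by the actual lesson rate."""
    return {name: value * lessons_per_day
            for name, value in zip(COLUMNS, TABLE[accuracy])}


def max_lesson_rate(accuracy, column, target):
    """Largest lesson rate that keeps `column` at or below `target`."""
    per_lesson = TABLE[accuracy][COLUMNS.index(column)]
    return target / per_lesson


load = daily_load(0.94, 20)
print({name: round(value) for name, value in load.items()})
# {'total_reviews': 186, 'apprentice_reviews': 94, 'total_queue': 3824, 'apprentice_queue': 84}
print(round(max_lesson_rate(0.85, "apprentice_queue", 100), 2))  # 16.39
print(round(max_lesson_rate(0.85, "total_reviews", 80), 2))      # 6.45
```

Both directions use the same proportionality: you multiply to go from lessons to load, and you divide to go from a load target back to lessons.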

The average time to burn does not depend on your lesson rate, only on your accuracy, so don’t multiply it: just read it directly from the table. Notice that if your accuracy is 100% (no mistakes ever), the time to burn is simply the sum of the SRS delays: 4 months + 1 month + 2 weeks + 1 week + 2 days + 1 day + 8 hours + 4 hours = 174.5 days (counting a month as 30 days).
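As a quick check of that sum, here it is in Python. The delays are in hours so the arithmetic stays exact, and a month is counted as 30 days, which is what makes the total come out to 174.5:

```python
# SRS delays per level, in hours: Apprentice 1-4, Guru 1-2, Master, Enlightened.
# "1 month" and "4 months" are counted as 30 and 120 days.
SRS_DELAYS_HOURS = [4, 8, 24, 2 * 24, 7 * 24, 14 * 24, 30 * 24, 120 * 24]

# With 100% accuracy, every item waits through each level exactly once.
time_to_burn_days = sum(SRS_DELAYS_HOURS) / 24
print(time_to_burn_days)  # 174.5
```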

| Accuracy | Total reviews per day | Apprentice reviews per day | Total queue size (items) | Apprentice queue size (items) | Time to burn (days) |
|---|---|---|---|---|---|
| 50% | 178.0 | 150.0 | 679.3 | 109.3 | 679 |
| 51% | 154.9 | 128.6 | 642.7 | 96.2 | 643 |
| 52% | 135.4 | 110.8 | 609.6 | 85.0 | 610 |
| 53% | 119.0 | 95.9 | 579.5 | 75.3 | 580 |
| 54% | 105.2 | 83.3 | 552.2 | 66.9 | 552 |
| 55% | 93.3 | 72.8 | 527.3 | 59.7 | 527 |
| 56% | 83.2 | 63.8 | 504.4 | 53.4 | 504 |
| 57% | 74.5 | 56.2 | 483.4 | 47.9 | 483 |
| 58% | 67.1 | 49.7 | 464.0 | 43.0 | 464 |
| 59% | 60.6 | 44.1 | 446.1 | 38.8 | 446 |
| 60% | 55.0 | 39.3 | 429.5 | 35.1 | 429 |
| 61% | 50.1 | 35.2 | 414.1 | 31.8 | 414 |
| 62% | 45.8 | 31.6 | 399.7 | 29.0 | 400 |
| 63% | 42.0 | 28.5 | 386.4 | 26.4 | 386 |
| 64% | 38.7 | 25.8 | 373.8 | 24.1 | 374 |
| 65% | 35.8 | 23.5 | 362.1 | 22.1 | 362 |
| 66% | 33.2 | 21.4 | 351.1 | 20.3 | 351 |
| 67% | 30.9 | 19.6 | 340.8 | 18.7 | 341 |
| 68% | 28.8 | 18.0 | 331.0 | 17.3 | 331 |
| 69% | 26.9 | 16.6 | 321.8 | 16.0 | 322 |
| 70% | 25.3 | 15.3 | 313.1 | 14.8 | 313 |
| 71% | 23.8 | 14.2 | 304.9 | 13.7 | 305 |
| 72% | 22.4 | 13.2 | 297.1 | 12.8 | 297 |
| 73% | 21.2 | 12.3 | 289.7 | 11.9 | 290 |
| 74% | 20.1 | 11.5 | 282.7 | 11.2 | 283 |
| 75% | 19.0 | 10.8 | 276.0 | 10.5 | 276 |
| 76% | 18.1 | 10.2 | 269.6 | 9.8 | 270 |
| 77% | 17.2 | 9.6 | 263.6 | 9.2 | 264 |
| 78% | 16.5 | 9.1 | 257.8 | 8.7 | 258 |
| 79% | 15.7 | 8.6 | 252.2 | 8.2 | 252 |
| 80% | 15.1 | 8.2 | 246.9 | 7.8 | 247 |
| 81% | 14.4 | 7.8 | 241.8 | 7.4 | 242 |
| 82% | 13.9 | 7.4 | 237.0 | 7.0 | 237 |
| 83% | 13.3 | 7.1 | 232.3 | 6.7 | 232 |
| 84% | 12.8 | 6.8 | 227.8 | 6.3 | 228 |
| 85% | 12.4 | 6.5 | 223.5 | 6.1 | 224 |
| 86% | 12.0 | 6.2 | 219.4 | 5.8 | 219 |
| 87% | 11.5 | 6.0 | 215.4 | 5.5 | 215 |
| 88% | 11.2 | 5.8 | 211.5 | 5.3 | 212 |
| 89% | 10.8 | 5.6 | 207.8 | 5.1 | 208 |
| 90% | 10.5 | 5.4 | 204.3 | 4.9 | 204 |
| 91% | 10.2 | 5.2 | 200.8 | 4.7 | 201 |
| 92% | 9.9 | 5.0 | 197.5 | 4.5 | 198 |
| 93% | 9.6 | 4.9 | 194.3 | 4.4 | 194 |
| 94% | 9.3 | 4.7 | 191.2 | 4.2 | 191 |
| 95% | 9.1 | 4.6 | 188.2 | 4.1 | 188 |
| 96% | 8.8 | 4.4 | 185.3 | 4.0 | 185 |
| 97% | 8.6 | 4.3 | 182.4 | 3.8 | 182 |
| 98% | 8.4 | 4.2 | 179.7 | 3.7 | 180 |
| 99% | 8.2 | 4.1 | 177.1 | 3.6 | 177 |
| 100% | 8.0 | 4.0 | 174.5 | 3.5 | 175 |

Here it is in graph form (again, for the reference lesson rate of 1 lesson per day):

We can see that the review rate blows up dramatically as accuracy decreases. At every accuracy, at least half of the reviews are apprentice reviews, and that share grows as accuracy drops (from 4.0 of 8.0 at 100% to 150.0 of 178.0 at 50%). That makes sense, since bad accuracy means a lot of items fall back down to (or stay in) the apprentice levels.

For the number of items in the system, the growth is less dramatic, and only a small portion of them are apprentice items. That also makes sense, since the higher levels have much longer SRS delays: most items are patiently waiting in the Master or Enlightened levels.

Finally, here is a Google Docs spreadsheet you can copy and toy with: simply change the lesson rate value in the yellow box to update the table and graphs.

Notes:

- You can, if you like, make separate computations for each type of item (radical, kanji, vocab), using the accuracy and lesson rate specifically for each item type, and then add the results together. It will be more precise.

- I assumed that accuracy is the same at every SRS level, which might not be true. I don’t know if it’s possible to get your accuracy split by level, and it would also make the formulas much bigger without much benefit.

- This also assumes that you have been studying long enough to have started burning items. Before your first burn, the system is “ramping up” to its full capacity, and the real numbers will all be smaller than those indicated here.

- Last assumption: you do your reviews as soon as they become available. In practice there is usually some delay (for instance if you review only once per day), but that only increases the numbers slightly. We do assume, however, that you review all available items whenever you review. If you let items accumulate, either the system won’t be stable (see below), or the extra waiting adds longer delays, which would need to be taken into account in the model.
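Every result is proportional to the lesson rate, so the per-type computations from the first note simply add up. Here is a minimal sketch in Python. The per-lesson review rates are copied from the table, while the accuracies and lesson rates in `my_items` are hypothetical example inputs.

```python
# Total reviews per day at the reference rate of 1 lesson/day, copied from the table.
REVIEWS_PER_LESSON = {0.85: 12.4, 0.90: 10.5, 0.94: 9.3}

# Hypothetical example: (accuracy, lessons per day) for each item type.
my_items = {
    "radical": (0.94, 2),
    "kanji": (0.85, 5),
    "vocab": (0.90, 10),
}

# Each item type is its own independent flow, so the reviews simply add up.
per_type = {kind: REVIEWS_PER_LESSON[acc] * rate
            for kind, (acc, rate) in my_items.items()}
total = sum(per_type.values())
print(round(total, 1))  # 185.6
```

The same summation works for the other columns (apprentice reviews and queue sizes), but not for time to burn. Time to burn is a per-item average, so you would have to weight it by each type's lesson rate.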

The math

Assumptions

In order to apply a mathematical model, I assumed that the system is stable, that is, no level accumulates items indefinitely: every item that comes in eventually comes out. This has the interesting consequence that your burn rate is exactly your lesson rate.

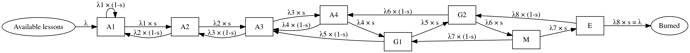

The entire Wanikani process is modeled as series of queues (one per SRS level), between which flow items (radicals, kanjis, vocabs):

The lesson rate is denoted λ.

All the flows are assumed to be Poisson processes, and all the queues are M/D/∞. Roughly, this means that items arrive in a queue at random times, start being processed as soon as they are in it (nobody waits for a free server), and stay there for a fixed, deterministic amount of time: the SRS delay for that level.

I denote the queues’ departure rates λ1 to λ8.

When an item exits a queue (i.e., a level), it is reviewed immediately. That means that each queue’s departure rate is also that level’s review rate. After being reviewed, the item moves up to the next level with probability s, your accuracy (correct review), and moves down with probability (1-s) (incorrect review). Within Apprentice it drops by one level and stays put at Apprentice 1; from Guru onward it drops by two levels.

Because every queue is stable (first assumption), each queue’s total arrival rate equals its departure rate. This gives a system of equations, which I solved using Maxima (the λ’s are written as l):
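The equations themselves did not survive in this copy of the post, so here is a reconstruction. It assumes that a wrong answer drops an item one level within Apprentice (never below Apprentice 1) and two levels from Guru 1 onward. With that rule, solving the system reproduces the table. For example, at s = 0.5 and λ = 1 it gives λ1 to λ8 = 44, 42, 40, 24, 14, 8, 4, 2, hence 178 reviews per day, 150 of them apprentice.

$$
\begin{aligned}
\lambda_1 &= \lambda + (1-s)\,(\lambda_1 + \lambda_2) \\
\lambda_2 &= s\,\lambda_1 + (1-s)\,\lambda_3 \\
\lambda_3 &= s\,\lambda_2 + (1-s)\,(\lambda_4 + \lambda_5) \\
\lambda_4 &= s\,\lambda_3 + (1-s)\,\lambda_6 \\
\lambda_5 &= s\,\lambda_4 + (1-s)\,\lambda_7 \\
\lambda_6 &= s\,\lambda_5 + (1-s)\,\lambda_8 \\
\lambda_7 &= s\,\lambda_6 \\
\lambda_8 &= s\,\lambda_7
\end{aligned}
$$

Adding all eight equations, every λi cancels except for one term, leaving s λ8 = λ. The burn rate equals the lesson rate, as the stability assumption requires.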

The total review rate Lt is simply the sum of the review rates of all levels, and La, the review rate for apprentice items, is the sum over the first four levels.
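If you don't have Maxima at hand, the same system can be solved numerically. Here is a minimal Python sketch that builds the balance equations from a transition rule and solves them by Gaussian elimination. All names are made up for illustration. The drop rule in `level_after_error` is an assumption (one level within Apprentice, never below Apprentice 1, and two levels from Guru onward), chosen because it reproduces the table. You can change it to explore other rules.

```python
APPRENTICE_LEVELS = 4  # 0-based levels 0-3 are Apprentice 1-4
N_LEVELS = 8           # Apprentice 1-4, Guru 1-2, Master, Enlightened


def level_after_error(level):
    """0-based level an item falls to after a wrong answer (assumed rule)."""
    drop = 1 if level < APPRENTICE_LEVELS else 2
    return max(level - drop, 0)


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        done = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - done) / m[r][r]
    return x


def review_rates(s, lesson_rate=1.0):
    """Review (= departure) rate of each level, in items per day."""
    # Balance for level j: l_j = lessons into j + sum_i P(i -> j) * l_i,
    # rearranged as (I - P^T) l = b.
    a = [[float(i == j) for j in range(N_LEVELS)] for i in range(N_LEVELS)]
    b = [lesson_rate] + [0.0] * (N_LEVELS - 1)
    for i in range(N_LEVELS):
        if i + 1 < N_LEVELS:  # correct: move up (from the last level, the item is burned)
            a[i + 1][i] -= s
        a[level_after_error(i)][i] -= 1 - s  # wrong: move down
    return solve_linear(a, b)


rates = review_rates(0.5)
L_t = sum(rates)                      # total review rate
L_a = sum(rates[:APPRENTICE_LEVELS])  # apprentice review rate
print(round(L_t, 1), round(L_a, 1))  # 178.0 150.0
```

With `s = 0.94`, the same function gives about 9.3 total and 4.7 apprentice reviews per day, matching the 94% row.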

We notice that all these are proportional to λ, the chosen lesson rate.

For the queue sizes, we use Little’s Formula: the average size of a stable queue is the arrival rate (same as departure rate) multiplied by the average time spent in the queue by an item. Q = λ * T.
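A quick way to convince yourself that Little’s Formula holds for an M/D/∞ queue is to simulate one. This minimal Python sketch (the function name and parameters are illustrative) draws Poisson arrivals with `random.expovariate` and keeps every item for exactly `delay` days. It then measures the time-averaged number of items, skipping the first `delay` days while the queue fills up.

```python
import random


def average_queue_size(arrival_rate, delay, horizon, seed=0):
    """Time-averaged size of a simulated M/D/inf queue.

    Arrivals form a Poisson process (exponential gaps between items), and every
    item stays exactly `delay` time units; with infinitely many servers nobody waits.
    The first `delay` time units are skipped, since the queue starts empty.
    """
    rng = random.Random(seed)
    start = delay
    area = 0.0  # integral of the queue size over [start, horizon]
    t = rng.expovariate(arrival_rate)
    while t < horizon:
        # Portion of this item's stay [t, t + delay] inside the window [start, horizon].
        area += max(0.0, min(t + delay, horizon) - max(t, start))
        t += rng.expovariate(arrival_rate)
    return area / (horizon - start)


# Example: 5 items/day entering a level with a 2-day SRS delay.
rate, delay = 5.0, 2.0
print(average_queue_size(rate, delay, horizon=20_000.0))  # close to rate * delay = 10
```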

We know exactly the amount of time spent in a queue by an item: it’s the SRS delay for that level (written here in days), so we can solve for the average queue sizes:

q1 = l1 * 1/6,

q2 = l2 * 1/3,

q3 = l3 * 1,

q4 = l4 * 2,

q5 = l5 * 7,

q6 = l6 * 14,

q7 = l7 * 30,

q8 = l8 * 120

For the average number of apprentice items, we simply take the sum of the first four levels, and for the total number, the sum of all levels:

Qa = q1 + q2 + q3 + q4,

Qt = Qa + q5 + q6 + q7 + q8
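As a worked example of these formulas, take s = 50% and λ = 1 lesson per day. Assume that a wrong answer drops an item one level within Apprentice and two levels from Guru onward. Under that assumption, the balance equations give review rates of 44, 42, 40, 24, 14, 8, 4 and 2 items per day for levels 1 to 8, which are the 178 total and 150 apprentice reviews of the 50% row. This Python sketch uses exact fractions, and the names are illustrative:

```python
from fractions import Fraction

# SRS delays in days for levels 1-8: 4h, 8h, 1d, 2d, 1w, 2w, 1 month, 4 months.
DELAYS_DAYS = [Fraction(1, 6), Fraction(1, 3), 1, 2, 7, 14, 30, 120]

# Review rates l1..l8 (items/day) at s = 0.5 and 1 lesson/day, from the balance
# equations under the assumed drop rule (one level within Apprentice, two from Guru on).
RATES_AT_50 = [44, 42, 40, 24, 14, 8, 4, 2]


def queue_sizes(rates, delays=DELAYS_DAYS):
    """Little's formula for each level: q_i = l_i * delay_i."""
    return [rate * delay for rate, delay in zip(rates, delays)]


q = queue_sizes(RATES_AT_50)
Q_a = sum(q[:4])  # apprentice items
Q_t = sum(q)      # all non-burned items
print(round(float(Q_a), 1), round(float(Q_t), 1))  # 109.3 679.3
```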

These results are also proportional to λ, which is why we can simply give the values for λ = 1 item/day, and multiply everything according to the real rate.

Finally, for the average burn time, we can apply Little’s Formula to the entire system (which was assumed to be stable). The average number of items in the whole system, Qt, equals the arrival rate λ times the average time T an item spends in the system. Qt = λ * T gives T = Qt / λ:

T = Qt / l
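The independence from λ is easy to check numerically. This sketch uses the s = 50% per-lesson review rates (44, 42, 40, 24, 14, 8, 4, 2 items per day for each daily lesson), obtained from the balance equations under the assumption that a wrong answer drops one level within Apprentice and two from Guru onward. The function name is hypothetical.

```python
from fractions import Fraction

DELAYS_DAYS = [Fraction(1, 6), Fraction(1, 3), 1, 2, 7, 14, 30, 120]
# Review rate of each level per daily lesson at s = 0.5 (assumed drop rule:
# one level within Apprentice, two from Guru onward).
RATES_PER_LESSON = [44, 42, 40, 24, 14, 8, 4, 2]


def time_to_burn(lesson_rate):
    """Average days from lesson to burn: Little's formula on the whole system."""
    rates = [lesson_rate * r for r in RATES_PER_LESSON]           # every l_i scales with l
    total_queue = sum(r * d for r, d in zip(rates, DELAYS_DAYS))  # Qt
    return total_queue / lesson_rate                              # T = Qt / l


for lesson_rate in (1, 5, 20):
    print(lesson_rate, round(float(time_to_burn(lesson_rate)), 1))  # 679.3 every time
```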

We see that this doesn’t depend on λ anymore, only on the accuracy s. A final note: because we assume that the system is stable, its departure rate (which is the burn rate) must be equal to the arrival rate (the lesson rate). Once the system has ramped up to capacity, you’ll burn items as fast as you learn them!

That’s it, folks! The ultimate power of maths! Now to do these reviews!